Businesses recognize that several factors play an important role in eliciting positive emotions about their brand. Who represents the brand, and how they come across, can make or break your relationship with your customers. Representatives who come across as more human and genuine tend to give off a more positive vibe. That’s why brands can benefit from analyzing how their representatives come across emotionally.

How can you do that? By using artificial intelligence, of course! We put our emotion recognition model to the test by analyzing how two entrepreneurs express their emotions on their Instagram profiles to their millions of followers.

Emotion recognition software and models

Aside from stating our emotions out loud, there are other ways we let each other know how we’re feeling. Your facial muscles might twitch, you might look away, or you might start slouching. These visual cues hint at how you might be feeling.

AI still has a long way to go before it can recognize emotions perfectly. People are still debating Ekman’s classic theory of emotions, which was used as a basis for later artificial intelligence and machine learning projects. In essence, the theory states that there are six universal human emotions and that they are expressed similarly across cultures. Critics argue that current AI technology can only process exaggerated expressions and that its training sets are not diverse enough.

Despite these arguments, there is still merit in using AI to detect facial expressions. We’ll explain how you can use machine learning to extract emotions from images below.

How does emotion recognition work?

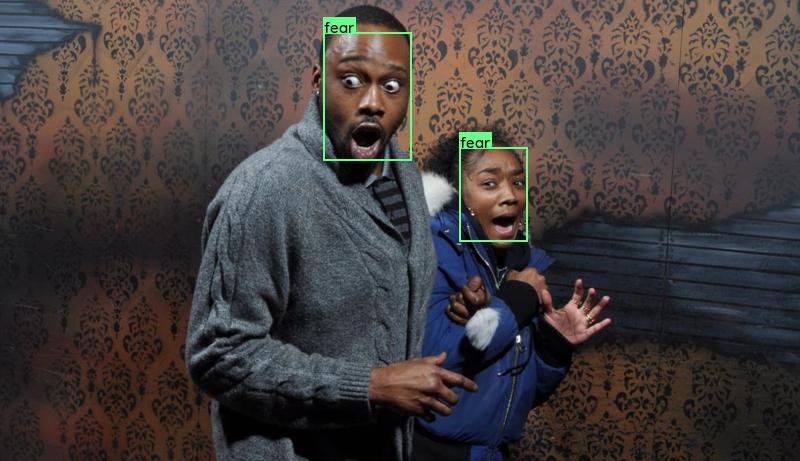

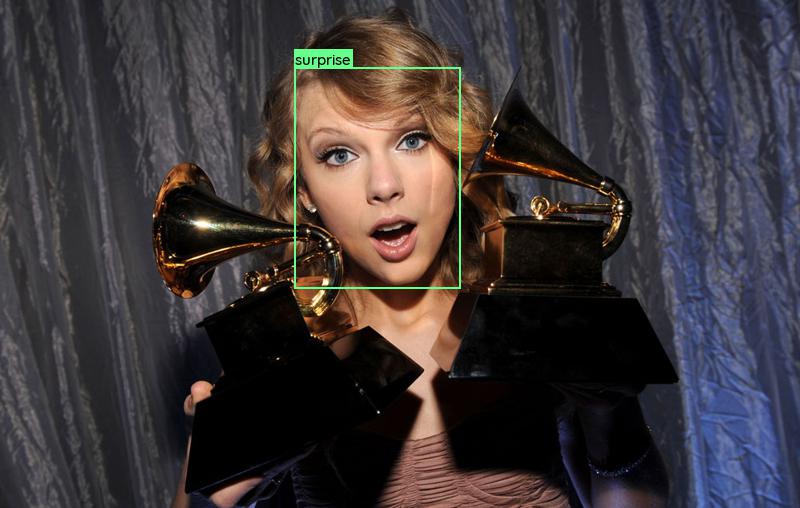

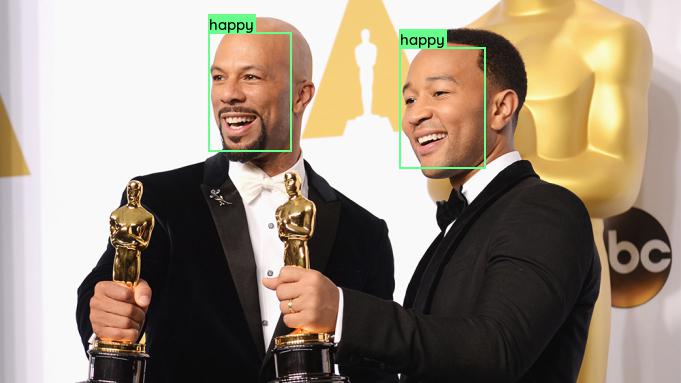

The AI tries to determine your emotional expression based on several factors, such as the position of your eyebrows and eyes and the shape of your mouth. Our models detect eight emotions in faces: neutral, happy, sad, surprise, fear, disgust, anger, and contempt. In case you’re wondering, this is how some of them are classified:

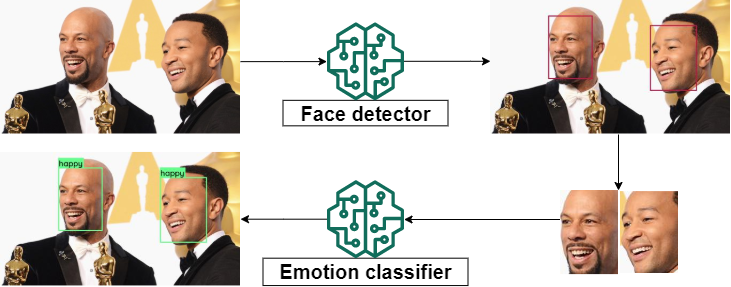

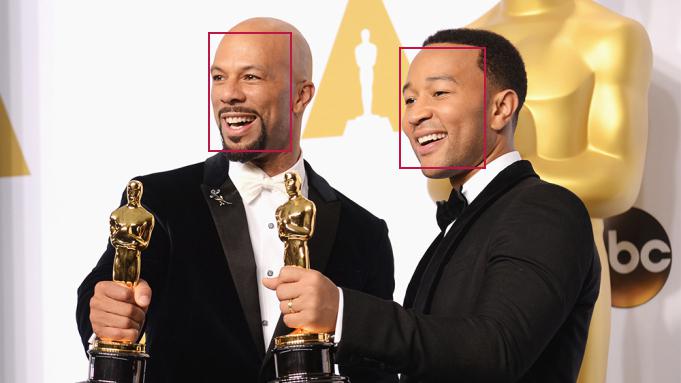
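Under the hood, a classifier like this typically outputs one raw score per emotion, and the final label is simply the most probable one. The sketch below shows that last step. It is a minimal illustration, not Sotrender’s code: the label order in `EMOTIONS` and the helper names are assumptions.

```python
import math

# Label order is an assumption for illustration; a real model defines its own.
EMOTIONS = ["neutral", "happy", "sad", "surprise",
            "fear", "disgust", "anger", "contempt"]


def softmax(logits):
    """Convert raw classifier scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(logits):
    """Return the most probable emotion label and its probability."""
    if len(logits) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} scores, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


if __name__ == "__main__":
    # Made-up scores for one face; the second score ("happy") is the highest.
    print(predict_emotion([0.2, 3.1, -1.0, 0.5, -2.0, -1.5, 0.0, -0.7]))
```

Returning the probability alongside the label makes it easy to flag low-confidence predictions instead of trusting every argmax.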

Thanks to our R&D team at Sotrender, we can break down how the emotion extractor works. The emotion extractor system includes two machine learning models: a face detector and an emotion classifier. Don’t worry, we’ve prepared a few examples to illustrate this.

How can Sotrender’s artificial intelligence models detect faces and emotions?

Most classical computer vision algorithms allow machine learning engineers to detect one face in an image. However, some solutions combine several classical approaches to recognize multiple faces, and their emotions, in a single image. In our case, we use data-driven machine learning approaches for this task: our system relies on state-of-the-art architectures, namely MTCNN to detect faces and ResNet50 to classify emotions. So how does Sotrender’s model compare to the most advanced ones?

On the AffectNet dataset, the state-of-the-art accuracy is currently 59.58%, while our model scores 57.1%. At first glance, neither number screams “exceptional” to those who aren’t familiar with machine learning. But with eight possible emotions, random guessing would be right only 12.5% of the time, and even human annotators often disagree about facial expressions. Essentially, our model’s accuracy is comparable to that of the state-of-the-art models currently available.
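To put those two numbers in context, here is the arithmetic in a short sketch. Accuracy is just the share of predictions that match the human labels; the 12.5% chance baseline assumes uniform random guessing over the eight classes. The helper name `accuracy` is illustrative.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the human-annotated labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


NUM_CLASSES = 8
chance = 1 / NUM_CLASSES  # 0.125: expected accuracy of uniform random guessing

# Figures quoted in the text (AffectNet, eight emotion classes)
sota, ours = 0.5958, 0.571

if __name__ == "__main__":
    print(f"chance {chance:.1%}, ours {ours:.1%}, state of the art {sota:.1%}")
    print(f"gap to state of the art: {(sota - ours) * 100:.2f} percentage points")
```

Both models land more than four times above chance, and the gap between them is under three percentage points.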

Visualizing the emotion recognition model

The process that these images undergo is actually fairly simple to understand when you visualize it. First off, we need an image with faces. This is the input image that we’re going to analyze.

The next step is to feed the input image into the face detector model.

So far so good! Now, the faces will be cropped out of the original image to become their own stand-alone images.
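The cropping step itself is simple once the detector has returned bounding boxes. Below is a minimal sketch that assumes each box is `(x, y, width, height)` in pixels and that the image is a row-major grid of pixels. Detectors differ in their box format, so treat that layout as an assumption. The optional `margin` keeps a bit of context around each face and is clamped to the image borders.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels; assumed format


def crop_faces(image: Sequence[Sequence[int]], boxes: List[Box], margin: int = 0):
    """Cut each detected face out of the image as its own stand-alone image.

    `image` is indexed as image[row][col]. Boxes that extend past the
    image edges (or have negative coordinates) are clamped to the borders.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    crops = []
    for x, y, w, h in boxes:
        top = max(0, y - margin)
        left = max(0, x - margin)
        bottom = min(height, y + h + margin)
        right = min(width, x + w + margin)
        crops.append([list(row[left:right]) for row in image[top:bottom]])
    return crops


if __name__ == "__main__":
    # A tiny 4x5 "image" whose pixel values encode their row and column.
    img = [[r * 10 + c for c in range(5)] for r in range(4)]
    print(crop_faces(img, [(1, 1, 2, 2)]))
```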

Finally, we’re at the emotion classification step. We feed the cropped faces into the second model, which tries to recognize their emotions. The final output isn’t the crops themselves but the original image, annotated with a prediction for each face.
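Put together, the two-stage process can be sketched as a single function. This is an illustration of the structure described above, not Sotrender’s implementation: `detect_faces` and `classify_emotion` are hypothetical placeholders standing in for the MTCNN detector and the ResNet50 classifier, and the `(x, y, width, height)` box format is an assumption. Each prediction keeps its box so it can be drawn back onto the original image.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels; assumed format
Image = Sequence[Sequence[int]]  # row-major grid: image[row][col]


@dataclass
class FacePrediction:
    box: Box          # face location in the ORIGINAL, uncropped image
    emotion: str      # predicted label, e.g. "happy"
    confidence: float


def recognize_emotions(
    image: Image,
    detect_faces: Callable[[Image], List[Box]],             # hypothetical face detector
    classify_emotion: Callable[[list], Tuple[str, float]],  # hypothetical emotion classifier
) -> List[FacePrediction]:
    """Detect faces, crop each one, classify the crop, and keep its box."""
    results = []
    for x, y, w, h in detect_faces(image):
        x0, y0 = max(0, x), max(0, y)  # detectors can return slightly negative coordinates
        crop = [list(row[x0:x + w]) for row in image[y0:y + h]]
        label, confidence = classify_emotion(crop)
        results.append(FacePrediction((x, y, w, h), label, confidence))
    return results


if __name__ == "__main__":
    img = [[0] * 8 for _ in range(6)]
    fake_detector = lambda im: [(0, 0, 3, 3), (4, 2, 3, 3)]  # two "faces"
    fake_classifier = lambda crop: ("neutral", 0.9)          # always "neutral"
    for prediction in recognize_emotions(img, fake_detector, fake_classifier):
        print(prediction)
```

Passing the two models in as functions keeps the pipeline independent of any particular detector or classifier, so either stage can be swapped or stubbed out for testing.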

Sotrender’s model can detect and recognize neutral, happy, sad, surprise, fear, disgust, anger, and contempt as emotions in images and videos.

To sum it up, the process looks like this:

What can we do with this method and what exactly does it tell you about your own company?

- We can quickly show you what emotions are present in your brand’s communication, whether in social media posts, images, or videos.

- You would expect most of your ads to feature people who look glowing, flawless, and happy, so this part of the analysis should be fairly straightforward.

- Finally, you will find out how the “faces of your brand” present themselves and, in turn, your brand. Whether these are influencers or the company’s CEO acting as brand ambassadors, their emotions will influence your target audience.

We can also adjust the models to fit your social media data. Specific business requirements make it easier to build a dataset that our clients can benefit from. So don’t be a stranger: drop us a line if you’d like us to prepare something that fits your needs.

Emotional intelligence, leadership, entrepreneurship

Businesses are taking part in the discussion about leadership, which covers topics such as what qualities make a good leader, who should lead, and how. One trait that keeps coming up as crucial for an effective leader is emotional intelligence. Being aware of the emotions you communicate to others can improve your relationships with your employees and regular customers.

Just as IQ is traditionally measured with ability tests, so is emotional intelligence. Emotional intelligence is a set of abilities related to recognizing your own and others’ emotions, understanding the differences between them, and knowing how to change emotional states. Some empirical evidence indicates that emotional intelligence is related to positive work attitudes, altruism, and work outcomes. What’s more, other research has shown that the best-performing managers were rated as more “emotionally competent”.

Businesses and customer bases are made up of people who have their own needs and feelings, so it makes sense that they have expectations about how others should be treated. It seems that the better the employees feel at a company, the better that company will be perceived. Otherwise, people will be incentivized to avoid the brand altogether.

We know that who represents your brand is sometimes almost as important as the products themselves. Steve Jobs’ followers would have told you the same thing. That’s why it’s crucial for leaders not only to be involved in their business but also to be authentic with their audience. Still, most of them understand that they’re subject to as much scrutiny as anyone else if they misbehave publicly (whether intentionally or not).

Let’s take a look at how two well-known entrepreneurs present themselves and how others see their behavior. In this article, you’ll see how machine learning can provide a better understanding of which emotions we display, and how often. But first, a short intro to both of them.

Richard Branson (Virgin Group)

Richard Branson has been active in many of his companies’ promotional campaigns and PR. Most of his stunts were received positively, such as when he put on a dress and makeup to publicize his wedding and bridalwear store. Not all of his antics would go over well in 2020, though; one example is when he dressed up as a Zulu warrior.

Generally, Branson comes across as someone who is genuine and cares about others. He frequently takes part in charity work, even pledging $3 billion to fighting climate change and cooperating with educational charities across Africa. Even the way he speaks about his employees earns him recognition, as these two quotes show:

I have always believed that the way you treat your employees is the way they will treat your customers, and that people flourish when they are praised.

Train people well enough so they can leave, treat them well enough so they don’t want to.

Given his carefree demeanor and confidence, we wouldn’t be surprised to see a happy Branson on social media.

Elon Musk

In 2015, Musk was seen as a “brand champion” whom other leaders should pay close attention to. He was able to reassure his fans and customers that, despite some incidents, Tesla’s cars and technology were generally safer than most competitors’ products. Even the owner of the Model S that caught fire in 2013 was satisfied with Tesla’s response to the incident. Public perception of Musk seems to have been generally positive at the time.

The picture looks quite different in 2020, as Musk seems to be showing more of his true self.

Consider how Elon Musk was perceived after he called the coronavirus panic “dumb” and announced that Tesla would reopen at the end of May. As you might have guessed, it wasn’t a great moment; that’s just not the right thing to post on social media during a pandemic. A workers’ group stated that “They need to prove they really care about their workers.” It definitely doesn’t help that neither Tesla nor Musk offered any evidence of adequate testing or safe working conditions.

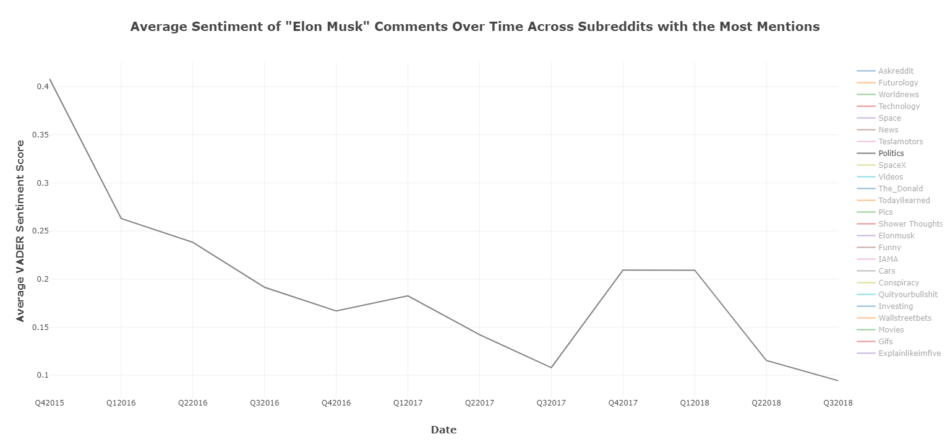

Here’s a graph that illustrates the sentiment of user comments about Elon Musk over time on Reddit, thanks to the user anthonytxie.

Analyzing Elon Musk’s emotions in pictures on social media

We took 130 images from Musk’s Instagram profile and used our emotion extractor model to analyze the 76 in which his face appeared. Then we simply calculated the percentage of images in which each emotion came up. Here’s how often each emotion was portrayed:

Are you surprised that the man who wants to create a colony on Mars comes across as neutral in 56% of the images we analyzed? The model only detected happiness in 16% of the images we worked with. Interestingly, he only appeared to be expressing fear in 1% of the images. Given what he posts on his other social media profiles, that one isn’t as surprising.

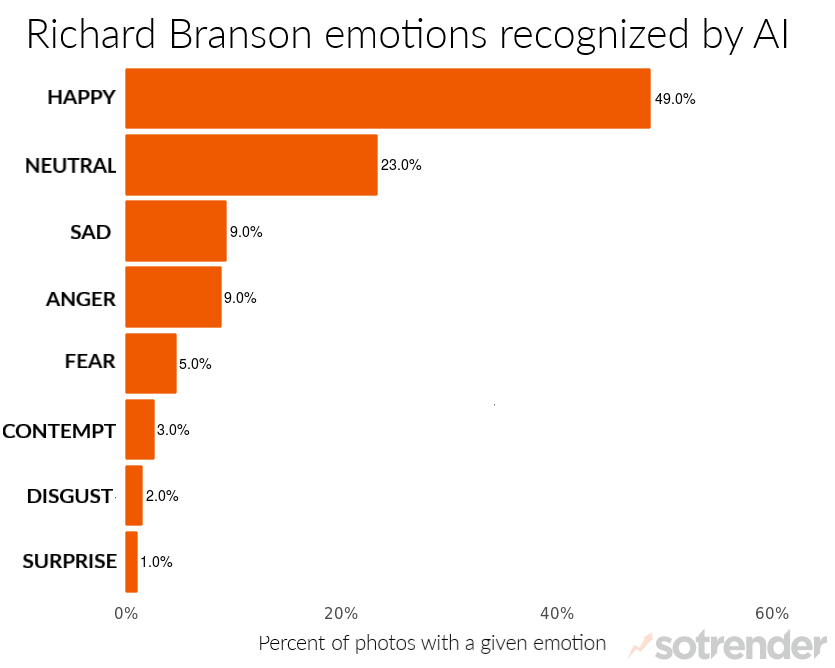
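The percentages above come from a straightforward count. The sketch below assumes one predicted emotion per analyzed image (the entrepreneur’s face). The function name is illustrative, and the example labels are made up rather than taken from either profile.

```python
from collections import Counter


def emotion_shares(labels):
    """Percentage of analyzed images in which each emotion was predicted.

    Assumes one label per image; results are rounded to one decimal place
    and listed from most to least frequent.
    """
    if not labels:
        return {}
    counts = Counter(labels)
    total = len(labels)
    return {emotion: round(100 * n / total, 1)
            for emotion, n in counts.most_common()}


if __name__ == "__main__":
    # Hypothetical predictions for four images
    print(emotion_shares(["neutral", "neutral", "neutral", "happy"]))
```

Because the denominator is the number of images with a detected face (76 for Musk), images without a face never dilute the percentages.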

Analyzing Richard Branson’s emotions in pictures on social media

As it turns out, Branson appears happy in almost half of his social media images. We followed the same method as with Musk, starting with 250 pictures from Branson’s Instagram.

Besides appearing happy more often, he also showed negative emotions more often than Musk did:

- Sadness 9% of the time vs. 6%

- Anger 9% of the time vs. 4%

- Fear 5% of the time vs. 1%

Interestingly, though, Musk appeared surprised more often than Branson, who looked surprised in only 1% of the photos he was in.

Based on these analyses, we can see that the two entrepreneurs differ in how they portray themselves on social media.

What about emotion recognition in videos?

By now, you should understand how we analyze individual still images. Videos can be analyzed in a few different ways. The first is to analyze every frame, treating each as a separate image. The alternative is to extract and analyze only the most important frames in the video (keyframes). Either way, we can still combine the results from all analyzed frames to tell you which facial expressions appeared across the whole video.
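The two strategies can be sketched as follows. The article doesn’t say how keyframes are chosen, so the frame-difference heuristic here (keep a frame only when its average pixel change from the last kept frame exceeds a threshold) is an assumption, as are the flattened grayscale frames and the hypothetical `classify_frame`, which returns one label per frame.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional, Sequence

Frame = Sequence[float]  # flattened grayscale pixels; simplifying assumption


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel change between two equally sized frames."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def select_keyframes(frames: List[Frame], threshold: float) -> List[int]:
    """Keep a frame only if it differs enough from the last kept frame."""
    if not frames:
        return []
    kept = [0]  # the first frame is always a keyframe
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[kept[-1]]) > threshold:
            kept.append(i)
    return kept


def video_emotion_summary(
    frames: List[Frame],
    classify_frame: Callable[[Frame], str],  # hypothetical: one label per frame
    threshold: Optional[float] = None,
) -> Dict[str, float]:
    """Share of analyzed frames per emotion across the whole video.

    threshold=None analyzes every frame; a number analyzes keyframes only.
    """
    if threshold is None:
        indices = list(range(len(frames)))
    else:
        indices = select_keyframes(frames, threshold)
    labels = [classify_frame(frames[i]) for i in indices]
    if not labels:
        return {}
    counts = Counter(labels)
    return {emotion: n / len(labels) for emotion, n in counts.items()}
```

Analyzing every frame weights each expression by how long it stays on screen, while keyframes weight each distinct moment once and cost far fewer model calls.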

So here’s a video of emotion recognition in action.

The challenges of emotion recognition

As with all developing technology, there are some imperfections when dealing with this type of data. For one, people have to label the images in a training dataset, and different people can read emotions differently. This results in noisy data; in other words, inter-rater reliability can be low. For example, one annotator might read raised eyebrows as shock while another reads them as happiness. A psychology professor at Northeastern University noted that inferring emotions from a few visual cues isn’t the same thing as knowing what a person actually feels. Specifically, although one facial movement can indicate anger, so can several other, subtler cues that are less obvious.
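Inter-rater reliability can be quantified. One common choice (our choice for this sketch, not necessarily what any dataset’s authors used) is Cohen’s kappa, which compares how often two annotators agree with how often they would agree by chance given their label frequencies. scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic; the plain-Python version below just makes the formula visible.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Agreement between two annotators, corrected for chance agreement.

    1.0 means perfect agreement; 0.0 means no better than chance.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used the same single label throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Two hypothetical annotators disagree on the first face only.
    a = ["surprise", "happy", "surprise", "neutral"]
    b = ["happy", "happy", "surprise", "neutral"]
    print(cohens_kappa(a, b))
```

In this made-up example the annotators agree on 75% of faces, but kappa is only about 0.64 once chance agreement is taken into account.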

Another issue is detecting emotions in people of color. Unfortunately, some models detect more anger in Black men than in white men. This suggests that training sets need to become more diverse so models can learn to recognize a wider range of faces. Experts in the field are working to fix this.
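One simple way to check a model for this kind of bias is to compare, per demographic group, how often faces that annotators did not label as angry are still predicted as angry (a false-positive rate). The sketch below assumes you have records of `(group, annotated_label, predicted_label)`; the function name and record format are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def false_positive_rate_by_group(
    records: Iterable[Tuple[str, str, str]],  # (group, annotated_label, predicted_label)
    emotion: str = "anger",
) -> Dict[str, float]:
    """Per group: of the faces annotators did NOT label as `emotion`,
    the share the model still predicted as `emotion`."""
    negatives: Dict[str, int] = defaultdict(int)
    false_positives: Dict[str, int] = defaultdict(int)
    for group, annotated, predicted in records:
        if annotated != emotion:
            negatives[group] += 1
            false_positives[group] += int(predicted == emotion)
    return {g: false_positives[g] / negatives[g] for g in negatives}
```

Conditioning on the annotated label matters. Comparing raw “anger” rates alone can’t distinguish a biased model from a test set in which one group happens to contain more genuinely angry faces.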

The hope is that we will eventually include more metrics that will allow for a more nuanced understanding of emotion recognition. For example, eye-tracking or voice recognition could be a good starting point, according to researchers from the Max Planck Institute for Informatics.

How does the face of your brand come across to others?

Being aware of your own and others’ emotions is an important component of being an effective leader and being perceived as one. If you don’t realize that you’re coming across as indifferent, moody, or uncaring, it could hurt your business and your employees. Your brand would benefit from knowing to what extent you present different emotions across your communication, both paid and organic. After all, it’s harder to advertise your company and associate it with positivity if the emotions associated with your brand ambassador are negative or indifferent.

Emotion recognition has come a long way and will continue to improve with time. The more diverse, high-quality datasets we acquire, the better our predictions will become. It doesn’t just have to be about detecting emotions in commercials! Actually, it’s worth analyzing industry giants from time to time.

When it comes to using our emotion recognition model, Musk and Branson make for interesting case studies because they’re such strong personalities that carry themselves differently. Musk comes across as more neutral in his social media appearances, while Branson comes across as happier. We can’t say that there is a definitive cause-and-effect relationship between their social media presentation and whether their company profits are going up. Still, the face of your company influences how people perceive your business.

Would you like to try out our emotion recognition model and see how it can be used for your business? Feel free to leave your contact information in the form below and we’ll get right back to you! 😉