The COVID-19 pandemic has obviously had a tremendous impact on the way we consume media and interact on social media. We can’t scroll through our feeds without coming across an article, opinion, or video that is somehow related to the pandemic. But with so much content, we need to be careful about what we believe and share.

In this article, we’ll briefly discuss how fake news, trolls, and bots are impacting social media during the pandemic.

Social media is generally used for the sake of interacting with other people in a light-hearted way. Even people who use their real social media profiles to troll others don’t tend to go overboard when their identity is known.

We’ve previously discussed how social media can be a tool in hybrid warfare, which is still happening today. In short, troll farms use tactics to spread propaganda on the internet.

Twitter and Instagram are two social media platforms that are currently doing what they can to detect strange behavior. This could include mass following and unfollowing of other users, posting a lot of content at once, or consistently using banned hashtags. Unfortunately, as bots become better at mimicking people and using language in a natural way, it will be difficult to stop propaganda from becoming a trending topic and taking over more space on social media.

Coronavirus discourse: Bot or not?

Bots by definition aren’t real people. They make someone believe they are interacting with a real person when they’re actually talking to a non-existent being. Bots can use hashtags and post content that can ultimately influence the algorithm of different social media platforms.

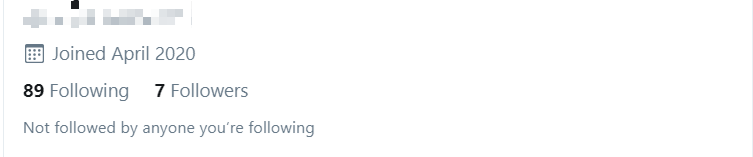

You might be surprised to learn that almost half of the Twitter accounts tweeting about COVID-19 are likely bots, according to researchers from Carnegie Mellon University. It can be difficult to tell a troll or a bot from a real person, but some signs can indicate whether the discussion you’re having online is real.

Here are a few things you can watch out for:

- Was the account made recently?

- Do they have a bio/caption? Is the caption filled with only hashtags?

- How many followers do they have? Do the accounts mutually follow each other?

- How often do they post content? Are there times when they don’t post at all?
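The checklist above can be sketched as a simple rule-based check. This is only an illustration: the `Account` fields and every threshold (90 days, 10 followers, 50 posts in a day) are assumptions made for the example, not values used by Twitter, Bot Sentinel, or the Carnegie Mellon researchers. It also skips the mutual-follow question, which would need follower lists.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Account:
    # Hypothetical fields; real platform data will look different.
    created: date
    bio: str
    followers: int
    posts_per_day: List[int]  # number of posts on each recent day


def bot_warning_signs(account: Account, today: date) -> List[str]:
    """Return the checklist items that this account trips."""
    signs = []

    # Was the account made recently? (assumed cutoff: 90 days)
    if (today - account.created).days < 90:
        signs.append("recently created")

    # Is there a bio, and is it more than a string of hashtags?
    words = account.bio.split()
    if not words:
        signs.append("empty bio")
    elif all(word.startswith("#") for word in words):
        signs.append("bio is only hashtags")

    # How many followers do they have? (assumed cutoff: 10)
    if account.followers < 10:
        signs.append("very few followers")

    # Bursts of posting mixed with completely silent days.
    daily = account.posts_per_day
    if daily and max(daily) >= 50 and 0 in daily:
        signs.append("bursts of posts with silent days")

    return signs
```

A single warning sign proves nothing on its own. Plenty of real people have new accounts or empty bios, so treat the result as a prompt to look closer rather than a verdict.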

A recent Bot Sentinel analysis spotted bots and trolls attempting to influence lockdown and quarantine protests with hashtags like #ReopenAmericaNow and #StopTheMadness on Twitter. What was the content of their posts? Here’s a short rundown:

- Political parties want to prevent people from voting

- Someone is trying to hurt the economy and make the US president look bad

- Inaccurate statistics about the virus mortality rate

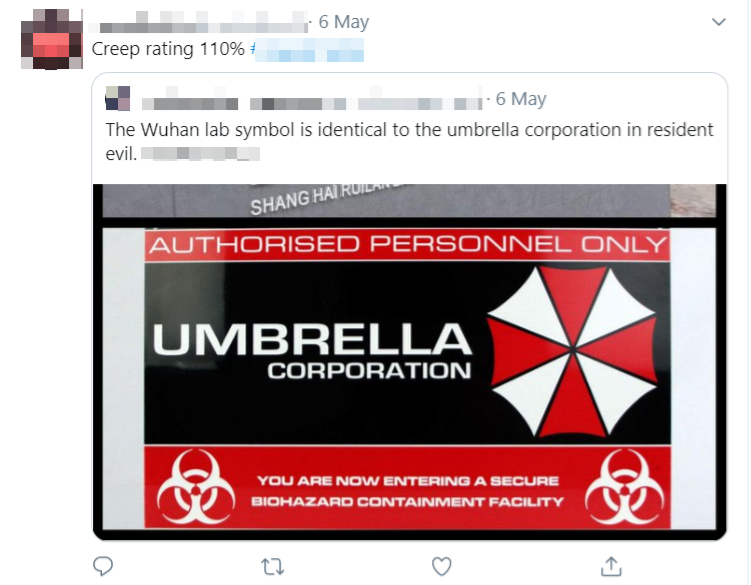

According to the researchers at Carnegie Mellon University, there are also tweets about other conspiracy theories. These include claims that 5G towers make people more susceptible to catching the virus, or that hospitals are filled with mannequins.

Bot Sentinel suggests that there is more damaging content on Twitter than we may realize. However, a Twitter spokesperson disputed the claims made by Bot Sentinel, saying that they’re simply prioritizing content with calls-to-action that can cause physical harm.

So for example, the tweet below won’t be taken down because it doesn’t directly tell the readers to do something. Even if the tweet is attempting to instill hostility, it technically might not breach Twitter’s Terms of Service.

We need to remember that troll farms exist. These are essentially people who could be paid to push political agendas by spreading disinformation. Don’t confuse them with bots, though.

Canada’s Chief of the Defence Staff said that there are already signs that people are trying to take advantage of uncertainty and fear online. Even Bill Gates is constantly harassed online by trolls who hide their identity. He’s regularly accused of attempting to microchip the population by endorsing the creation of a COVID-19 vaccine. At the moment, Russian and Chinese “troll farms” and officials are being accused of spreading various conspiracy theories.

Social media platforms and fact-checking

We’ve shown you how disinformation can spread on social media. Now we’ll show you how social media platforms handle it.

Bots, trolls, and real-life uninformed people who post about conspiracy theories have different intentions. Still, their posts are being taken down on social media because they have the potential to do significant damage. Several news outlets, such as The Hill, have reported that some states had a spike in cases shortly after the protests.

What’s more, there are other casualties and damages being done because of the spread of disinformation. For example, security guards and store clerks are being physically assaulted because they’re informing their patrons that they must wear a mask to shop at their location. These are just a few examples of the severe consequences that social media platforms and organizations are trying to prevent in a variety of ways.

YouTube

According to Statista in 2019, over 500 hours of video content were uploaded to YouTube per minute. It’s clearly a platform with an abundance of content. But how do they manage to prevent users from spreading harmful or inaccurate content?

YouTube regularly displays calls to action (CTAs) related to COVID-19 on both:

your homepage…

…and underneath videos that you’re watching.

What’s more, interviews and media networks’ financial affiliations are made clear underneath the videos for transparency. These are small things that eventually make it easier to prevent disinformation from being spread to a wider audience.

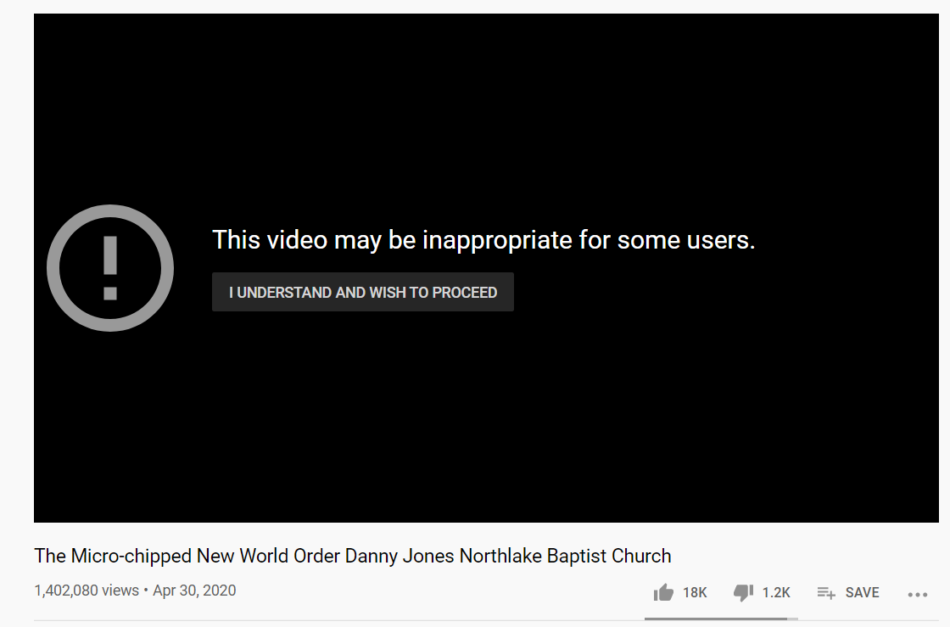

Some videos were completely removed from YouTube due to the amount of unverified information.

Twitter and Facebook

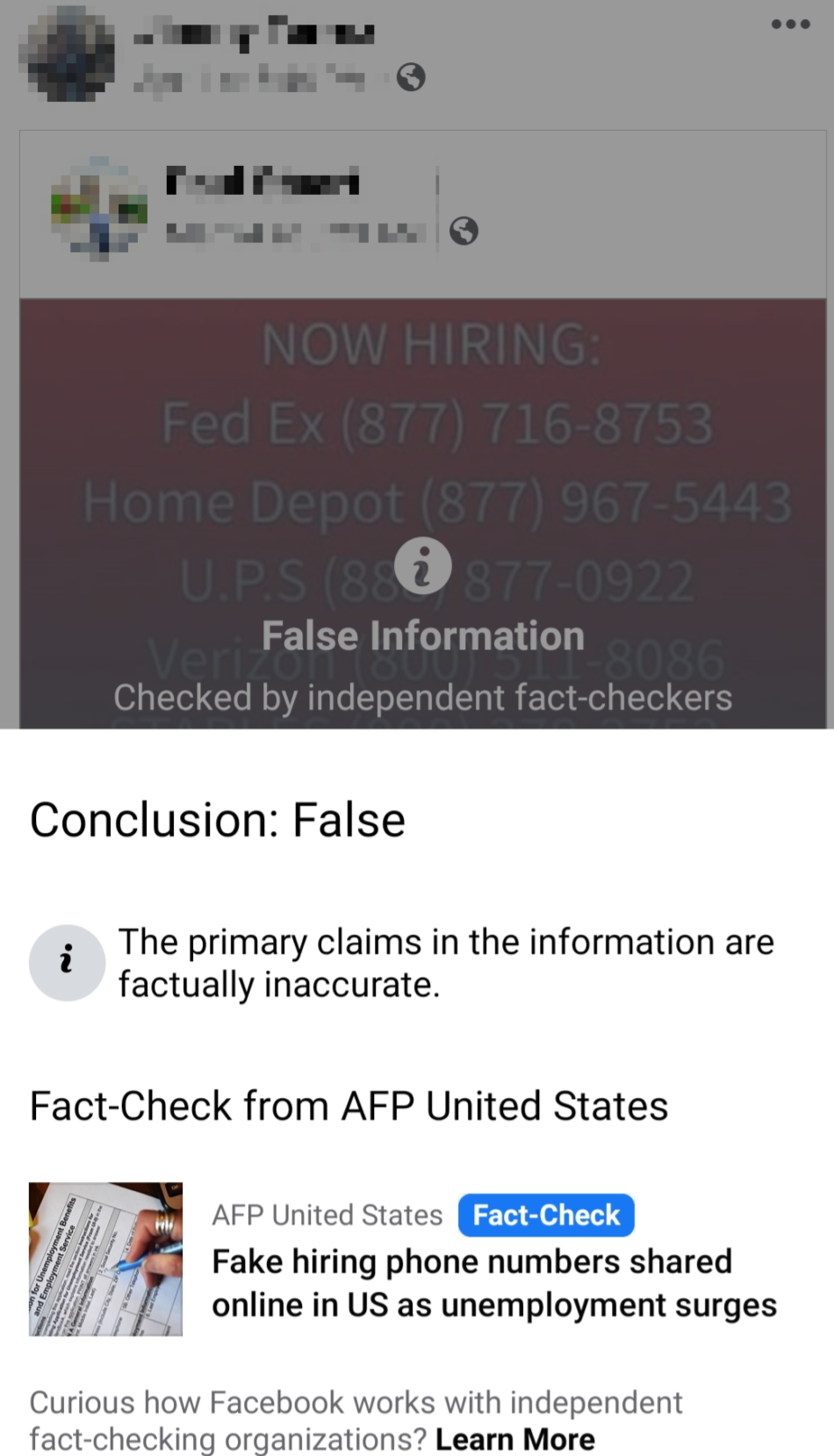

It’s also worth remembering that Twitter is finally going to put up information about which COVID-19 tweets are fake news. Apparently, it will be similar to how Facebook has been labeling false information on its platform:

This is the real test. https://t.co/BqlQt3Lj9i

— Donie O’Sullivan (@donie) May 11, 2020

Here’s Facebook for a comparison:

Facebook is taking down content that encourages anti-quarantine activities. So if you’ve seen content that promotes not wearing a mask or going to the beach, or that claims doctors are lying, it’s likely that it was taken down soon after. Facebook has done this rather well, since it shows the exact source of its information. That way, you can learn more about how they check facts.

How organizations can fight back against disinformation

Now that you’re aware of the intensity of the spread of disinformation, let’s take a look at how organizations and institutions are fighting back.

Warsaw’s own POLIN museum had to fight back against attacks in 2018 when they opened another exhibition. Through our cooperation, they were able to get some insight into what was going on. Here’s what their Deputy Marketing Manager had to say about the situation:

In 2018, when the POLIN Museum of the History of Polish Jews opened an exhibition about the anti-Semitic witch-hunt in Poland in March 1968, there were intensified attacks on the Museum in social media. The trolling analysis carried out at the time showed, among others, the operation of groups of profiles publishing exactly the same content in different places on Facebook at the same time. In addition to content directed against the museum, those profiles also often published content that showed Germany and the European Union in a bad light.

Małgorzata Zając, Deputy Marketing Manager
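The pattern described in the quote, several profiles publishing exactly the same content at the same time, can be looked for with a simple grouping step. The sketch below is not the analysis that was carried out for the museum. It is a minimal illustration, and the input format, the function name `find_coordinated_posts`, and the default thresholds (3 profiles within 10 minutes) are all assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_coordinated_posts(posts, min_profiles=3, window=timedelta(minutes=10)):
    """Flag texts posted by several distinct profiles within a short window.

    posts: iterable of (profile_id, text, timestamp) tuples (assumed format).
    Returns {normalized_text: sorted list of profiles in the first
    window that reaches min_profiles distinct profiles}.
    """
    # Group posts by text, ignoring case and extra whitespace.
    by_text = defaultdict(list)
    for profile, text, timestamp in posts:
        key = " ".join(text.lower().split())
        by_text[key].append((timestamp, profile))

    flagged = {}
    for key, items in by_text.items():
        items.sort()  # order by timestamp
        start = 0
        for end in range(len(items)):
            # Shrink the window until it spans at most `window` of time.
            while items[end][0] - items[start][0] > window:
                start += 1
            profiles = {profile for _, profile in items[start:end + 1]}
            if len(profiles) >= min_profiles:
                flagged[key] = sorted(profiles)
                break
    return flagged
```

Exact matching only catches copy-and-paste campaigns. Accounts that lightly reword each post would slip through, so this is a starting point rather than a complete check.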

Meanwhile, UNESCO, a United Nations agency, has teamed up with the WHO. UNESCO promotes peace by having countries cooperate across the fields of education, science, and culture. Since promoting science is part of that mission, it regularly shares fact-checked information from reputable sources. What’s more, it encourages its followers to be skeptical about information from unreliable sources. Here are a few of the hashtags that UNESCO has been using to promote this message on Twitter:

- #DontGoViral

- #ShareKnowledge

- #ThinkBeforeClicking

And here are a few examples of their social media posts that promote media literacy:

Not all ‘experts’ online are trustworthy, be aware of the existence of false experts!

This thread is part of @unescobangkok’s campaign on disinformation, in collaboration with UNESCO Myanmar Office & @UNinMyanmar.

Infographic by @MILCLICKS #ThinkBeforeSharing #DontGoViral pic.twitter.com/GsuUjbUkhr

— UNESCO Bangkok (@unescobangkok) May 16, 2020

❓How to distinguish facts from opinions in a news article?

There are some typical key words that denote facts, such as demonstrate, statistics, references etc. #MILCLICKS #ThinkBeforeClicking #COVID19 pic.twitter.com/DrgtiOr4fd

— UNESCO MILCLICKS (@MILCLICKS) May 13, 2020

The Centers for Disease Control and Prevention (CDC) also regularly posts on its Facebook Page in a similar manner.

If you open the comments on the CDC’s posts, you’ll notice a mix of responses. Most are supportive or ask questions. However, some comments definitely require moderation.

A few users’ profiles seem like bots for several reasons. For example, they don’t respond to their mentions in a natural way. Instead, they more or less repeat mantras or quotes out of context. Some of them even link to viral conspiracy theory videos in a spammy way, which is reason enough to delete or hide the comment.

At some point, there might be more than a few intensely negative comments. If you start to see a flurry of incoming comments that just disparage you and your credibility, there’s a chance that you’re a victim of a planned attack. But you won’t be able to know unless you look into it on a deeper level.
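One rough way to notice such a flurry is to count negative comments per hour and compare each hour with the usual level. The sketch below assumes each comment already carries a negative/not-negative label from some sentiment tool. The function name `negative_spikes`, the `factor` of 3, and the `min_count` of 5 are arbitrary choices for illustration.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def negative_spikes(comments, factor=3.0, min_count=5):
    """Return the hours in which negative comments spiked.

    comments: iterable of (timestamp, is_negative) pairs (assumed format).
    An hour is flagged when it has at least `min_count` negative comments
    and more than `factor` times the median hourly count. The median is
    taken only over hours that had at least one negative comment.
    """
    per_hour = Counter(
        timestamp.replace(minute=0, second=0, microsecond=0)
        for timestamp, is_negative in comments
        if is_negative
    )
    if not per_hour:
        return []

    baseline = median(per_hour.values())
    return sorted(
        hour
        for hour, count in per_hour.items()
        if count >= min_count and count > factor * baseline
    )
```

A flagged hour only tells you when to look. Whether the comments come from a coordinated group still has to be checked by examining the accounts behind them.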

Analysis of fake accounts and activities

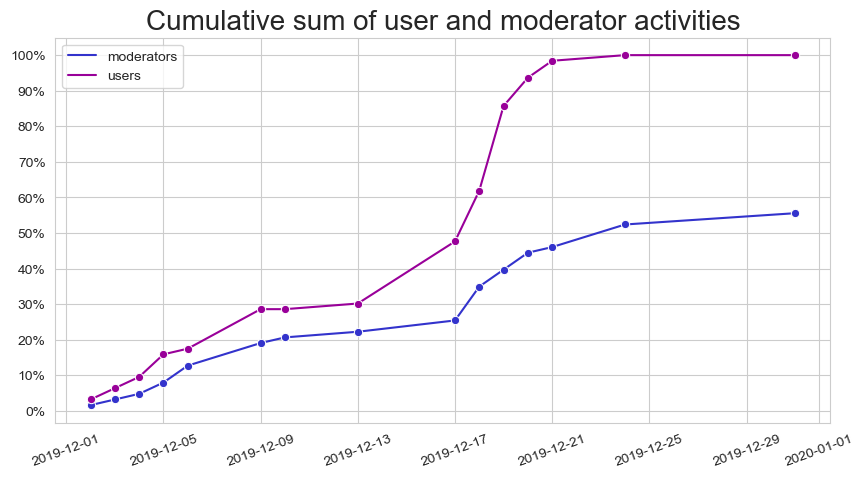

It’s important to be aware of the current situation that your brand or institution is in. This is where Sotrender’s report on activities would help you:

- Figure out if trolls and fake accounts are posting anything on your profile

- Learn if you were a victim of a coordinated attack

- Assess how much impact this had on your online presence and whether you should take further action

Essentially, you will be able to plan content that will improve your image and you will be better prepared to handle potential crises in the future.

If you’re interested in this type of report, feel free to contact our Sales Team to get more information. 😉

Comment moderation

If you’re running a Facebook Page that gets a lot of engagement, it’s worth paying attention to what users are saying. It’s difficult to sift through all of the comments on all of your posts, especially to single out the negative ones. Unfortunately, Facebook doesn’t allow you to filter your comments according to social sentiment. However, you have other options.

Sotrender’s machine learning-powered social inbox allows you to connect your Facebook profile and manage your comments and messages from one tab. The feature offers a variety of functions to make your social media management tasks easier.

- Save time looking for negative comments by using the social sentiment filters

- Keep all comment history from the moment of activation, so you will know if a user has a history of leaving disparaging comments

- Get a report detailing the effectiveness of the interactions between you and the users on your Page. No statistics background necessary!

Learning how to prevent crises and manage comments on your own is an important component of managing a social media profile. That’s why we recommend you learn how to do it efficiently and use a tool that will make it all easier.

Stay safe and share knowledge online

We’ve gone over a lot of information, so here are the takeaways:

- Dissemination of unverified information happens regularly

- Social media platforms are starting to recognize the real dangers of not monitoring content

- Organizations are using social media to combat misconceptions and purposeful deception

- It’s crucial that you prepare for any cyber-attacks and know what to expect

Hopefully, you now know how it’s possible to fight back against these issues. Finally, we do recommend that you take some time away from social media. Being exposed to damaging content regularly can definitely take a toll on you, so do your best to be responsible and to take care of yourself!